The New Direction

It is the Spring of 2025. Everyone at work is now using ChatGPT, for better or for worse, including myself.

Unwinding after a long day spent deciphering whether pull requests or Teams messages were authored by ChatGPT or real people, I turned on the NFL draft and quickly had an epiphany.

In hindsight, it was probably the least original epiphany one could have had at that particular moment in time.

“What if I just had ChatGPT do it for me?”

“It”, of course, being the heavy lifting of reviving my beloved yet decrepit Fantasy Football Factory, so it could finally reach its full, graph-native potential.

What if I simply told ChatGPT what I wanted from this knowledge graph of the NFL I had been building?

Would it then just… write the perfect Cypher query?

Introducing: The Fantasy Football Oracle

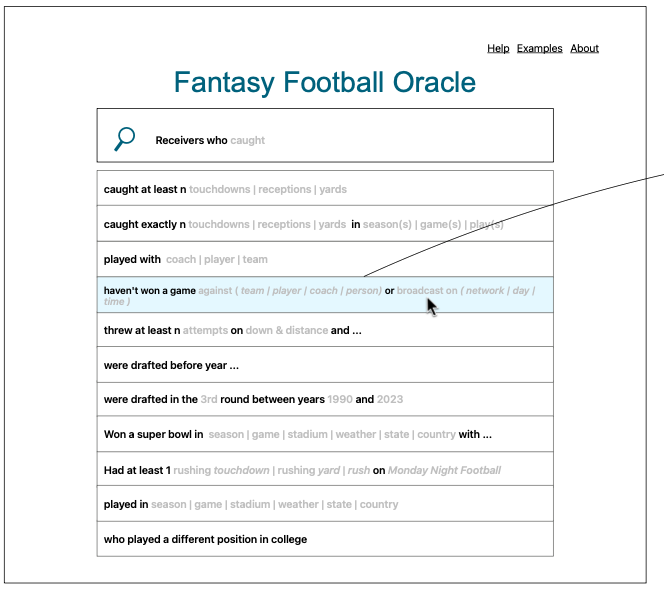

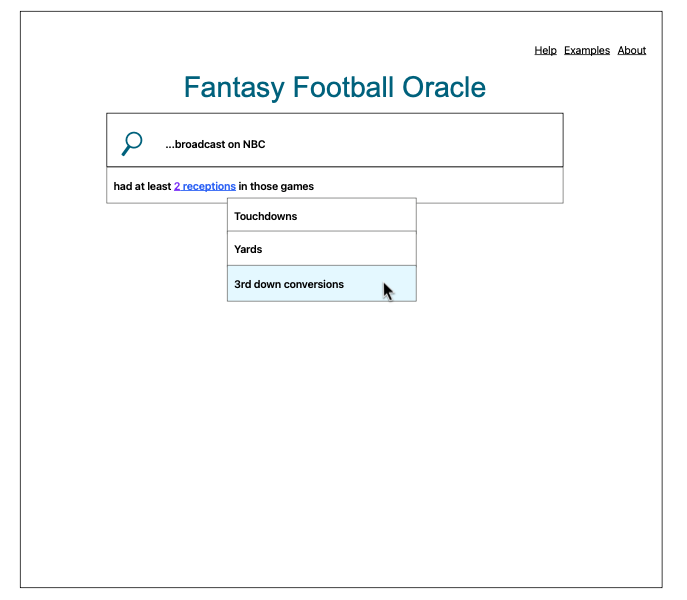

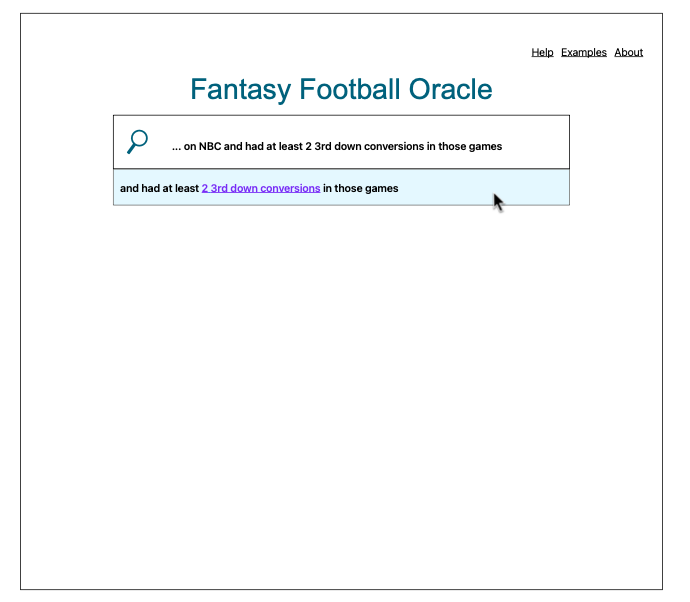

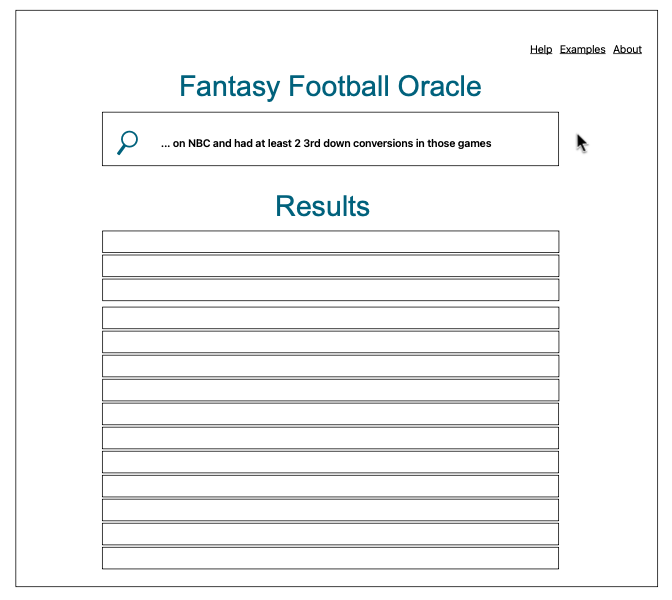

Fueled by the possibilities that an LLM could bring to my abandoned passion project, I sketched up a UI one night. The world was my oyster.

The idea was simple: “Google for deep-dive football stats”, with one important tweak.

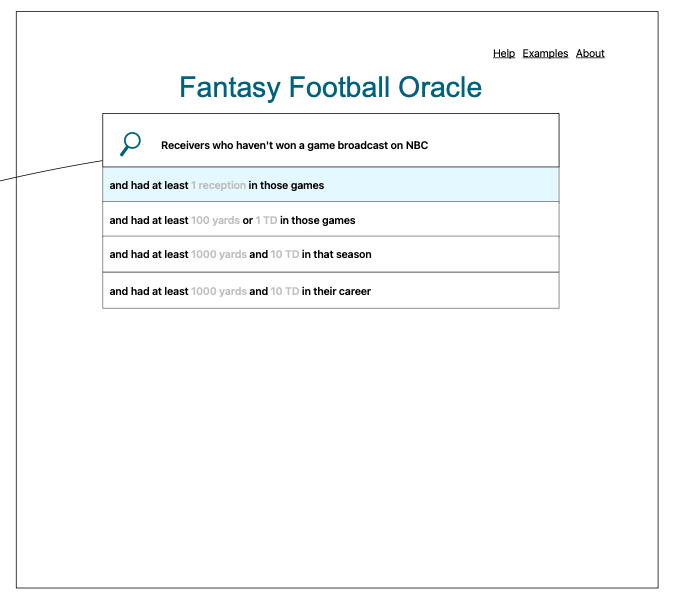

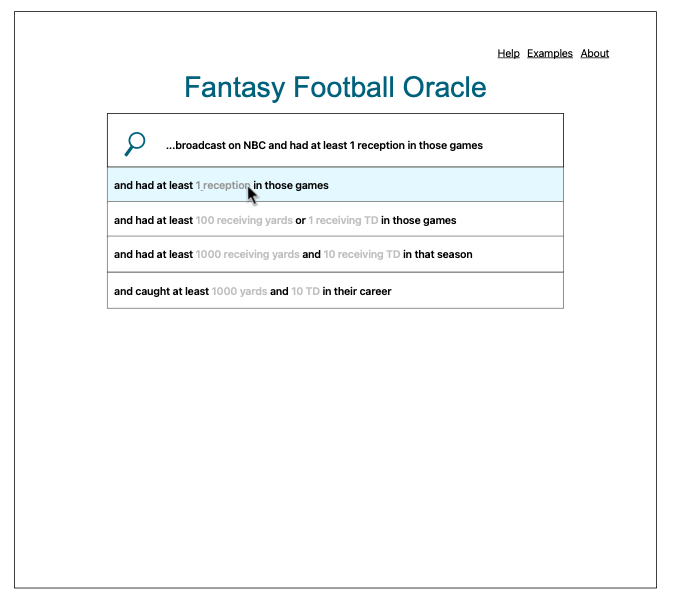

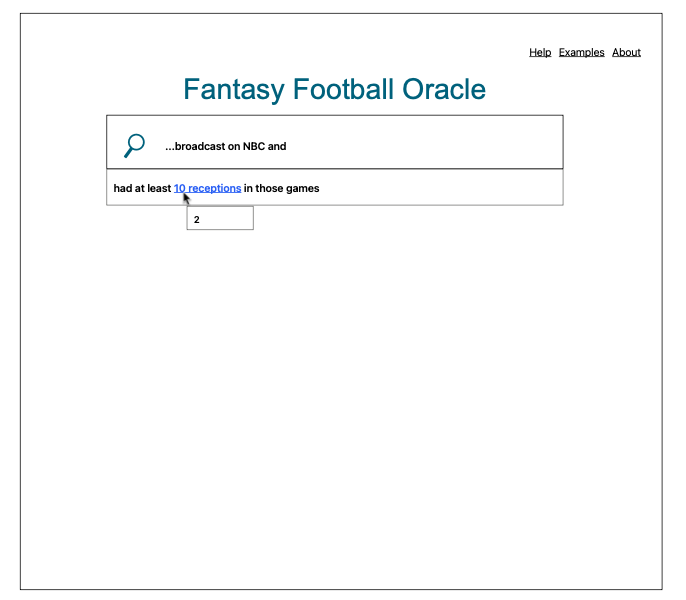

Instead of executing a search when you select a suggestion, you can optionally just add the suggestion to your query to get more relevant suggestions.

The Search UX

- Type something

- Get suggestions

- Select a suggestion

- Edit a suggestion (optional: for numeric info like stats)

- Repeat

- Search with the query you built via The Oracle’s suggestions
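The loop above amounts to a query builder that accumulates suggestions instead of searching on every selection. Here is a minimal sketch, assuming each accepted suggestion is a text token with an optional editable number. `Token`, `QueryBuilder` and the suggestion strings are hypothetical illustrations, not code from the app.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Token:
    """Hypothetical type: one accepted suggestion (a player, team, stat filter, etc.)."""
    label: str                      # text shown in the search bar
    value: Optional[float] = None   # editable number, only for stat-style suggestions

    def render(self) -> str:
        # Numeric suggestions display their (possibly edited) value after the label
        if self.value is None:
            return self.label
        return f"{self.label} {self.value:g}"


@dataclass
class QueryBuilder:
    """Hypothetical builder: collects suggestions instead of searching on select."""
    tokens: List[Token] = field(default_factory=list)

    def add(self, suggestion: Token) -> None:
        # "Select a suggestion": append it to the query rather than running a search
        self.tokens.append(suggestion)

    def edit(self, index: int, value: float) -> None:
        # "Edit a suggestion": only tokens that carry a number can be changed
        if self.tokens[index].value is None:
            raise ValueError("this suggestion has no editable value")
        self.tokens[index].value = value

    def text(self) -> str:
        # "Search": the final query is simply the accumulated tokens, in order
        return " ".join(token.render() for token in self.tokens)


# Example session with made-up suggestion text
query = QueryBuilder()
query.add(Token("QBs"))
query.add(Token("drafted by ATL"))
query.add(Token("passing TDs >", 30))
query.edit(2, 40)
print(query.text())  # QBs drafted by ATL passing TDs > 40
```

Keeping suggestions as structured tokens rather than raw text means the numeric parts stay editable after they are selected.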

The UX emulated a pattern I thought would be very familiar to anyone who has used Google.

So…everybody?

But I was getting way ahead of myself. I had to validate my idea before I built it.

Let’s just start with the search bar. We’ll tackle suggestions later…

User Research: Instagram Edition

I’ve always enjoyed user research.

I was lucky enough to spend most of my career on teams that took user research very seriously.

Once you’ve watched real users in your target demographic take all of 10 minutes on a Zoom call to unwittingly expose just how little you understand about your own application, user research becomes an essential part of any development process.

All that to say, I had a blast doing some DIY user research on Instagram.

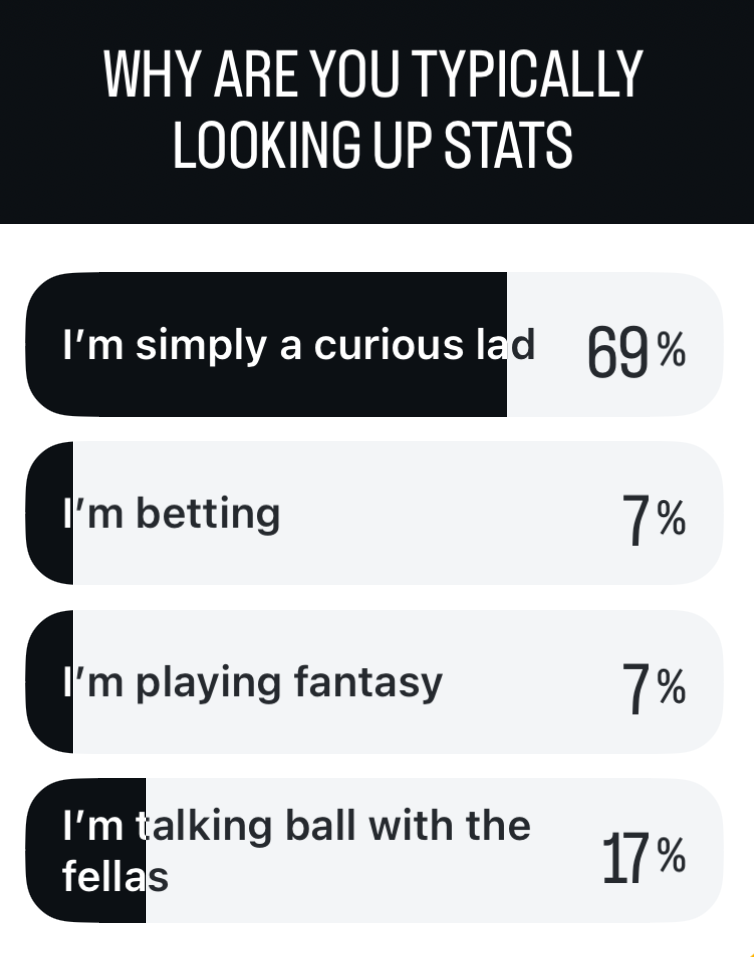

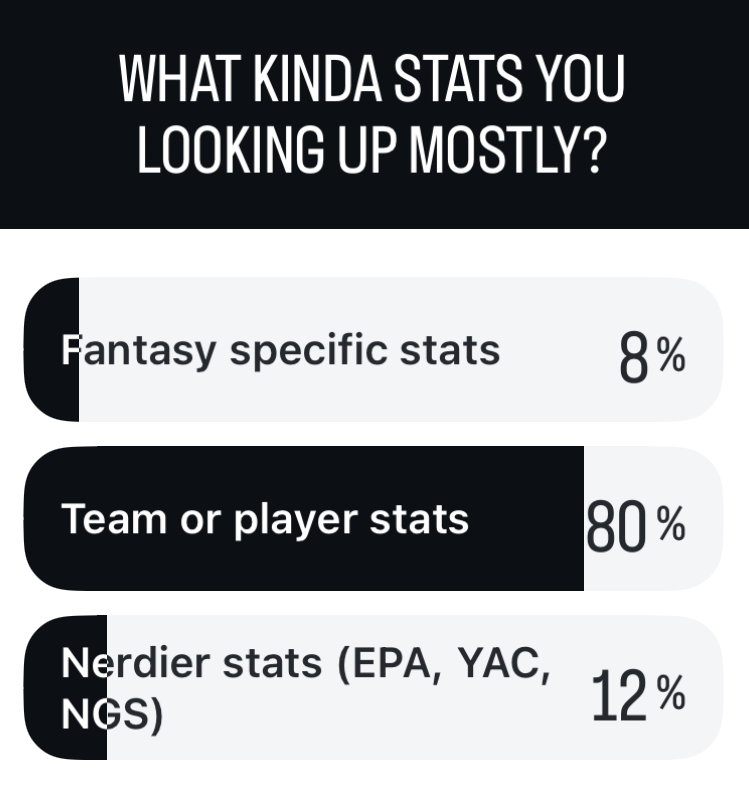

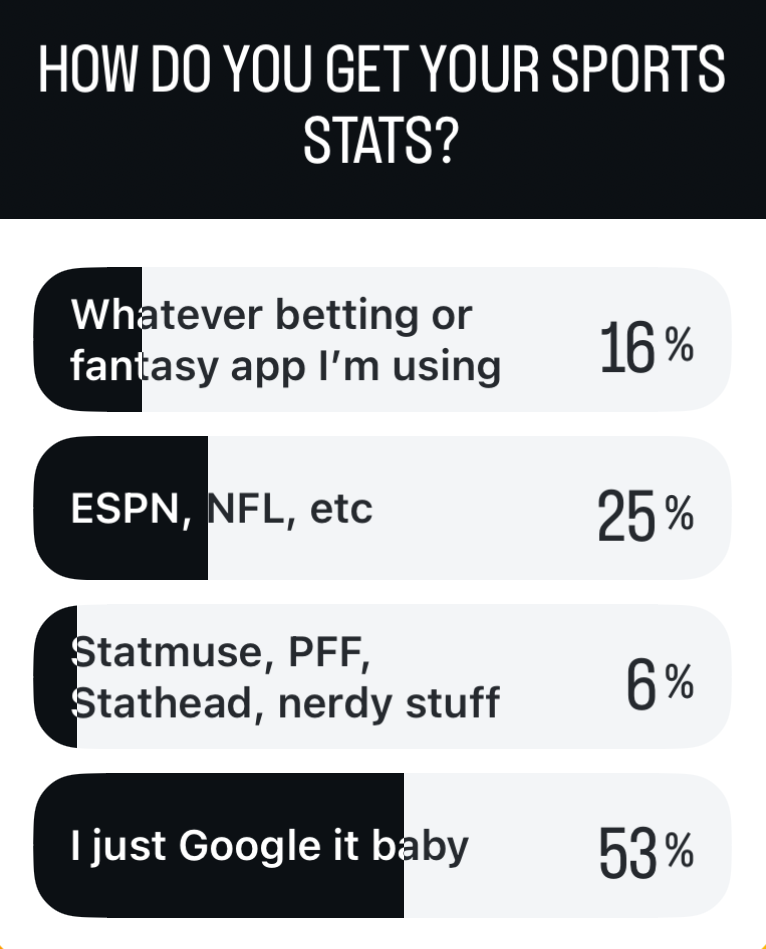

I asked the following 4 questions over the course of a couple of days and got a not-insignificant number of responses (30-50 per question). To get more responses, I asked about “Sports” statistics in general, not specifically the “NFL”.

Takeaway: Curious Lads just Google it, baby!

In hindsight, I treated this as far more validating for my Football Oracle design than it actually was.

- Yes, the single search bar was a familiar UX pattern

- Yes, people look up stats simply out of curiosity.

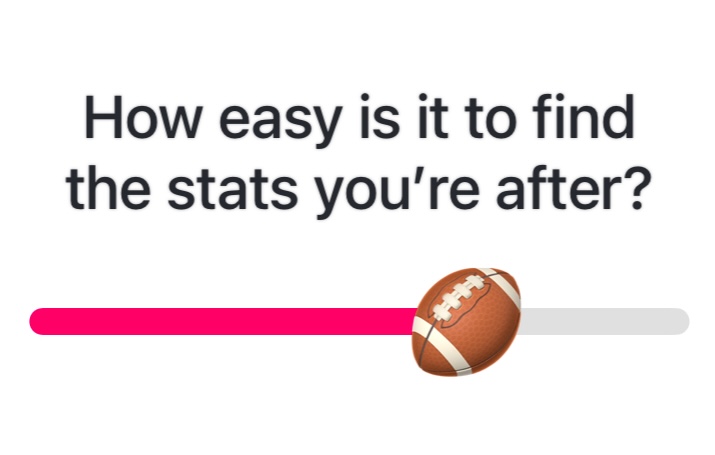

While this boded well for my design (a simple, search-bar-driven UX), I completely overlooked the slider that said “You know, finding stats is not so bad actually…”.

And crucially, these weren’t really the users I should target.

My goal was never to build for the “Everyone Who Follows Me On Instagram” demographic.

But it didn’t matter. I had made up my mind. I was going to do this thing, and knowing that most people are simply Curious Lads who just Google it, baby, was enough validation for me to build the Oracle.

Author’s note: Eventually, I moved off the “Oracle” branding. This was partly due to domain availability and partly because “Oracle” is actually a pretty confusing metaphor for what the app does, which is explicitly not to predict the future, but to dredge up the past instead.

From RAGs to Riches?

So I got my OpenAI API key and I was off to the races.

Before I even knew what RAG was, I had a dead simple, no-frills RAG pipeline that injected my entire (relatively small) Neo4j schema into my ChatGPT prompts.

```mermaid
sequenceDiagram
    participant React UI
    participant FastAPI
    participant Neo4j
    participant OpenAI

    Note over React UI,FastAPI: App Startup
    React UI->>FastAPI: GET /schema
    FastAPI->>Neo4j: Fetch schema
    Neo4j-->>FastAPI: Return schema
    FastAPI-->>React UI: Cache schema

    Note over React UI,FastAPI: Search Flow
    React UI->>FastAPI: POST /search (with search text)
    FastAPI->>OpenAI: Send prompt (schema + user text)
    OpenAI-->>FastAPI: Return generated Cypher query
    FastAPI->>Neo4j: Execute Cypher query
    Neo4j-->>FastAPI: Return query results
    FastAPI-->>React UI: Return results
```
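The “Send prompt (schema + user text)” step in the diagram boils down to pasting the whole schema next to the user’s raw question. Here is a minimal sketch of that step. It is hypothetical, not the app’s actual prompt: `SCHEMA`, `schema_to_text` and `build_messages` are made-up names, the schema is a simplified placeholder (the real one also had Draft nodes), and the output is just the role/content message list that OpenAI’s Chat Completions API accepts. No API call is made.

```python
# Hypothetical, simplified schema summary. In the app this was fetched from Neo4j
# at startup; these labels and relationship types are placeholders.
SCHEMA = {
    "nodes": {
        "Player": ["name", "position"],
        "Team": ["name"],
        "Game": ["name", "date"],
    },
    "relationships": [
        ("Player", "PLAYED_FOR", "Team"),
        ("Player", "WON", "Game"),
    ],
}


def schema_to_text(schema: dict) -> str:
    """Render the schema as compact Cypher-like patterns for the prompt."""
    lines = ["Node labels and properties:"]
    for label, props in schema["nodes"].items():
        lines.append(f"  (:{label} {{{', '.join(props)}}})")
    lines.append("Relationship types:")
    for src, rel, dst in schema["relationships"]:
        lines.append(f"  (:{src})-[:{rel}]->(:{dst})")
    return "\n".join(lines)


def build_messages(schema: dict, question: str) -> list:
    """Put the whole schema in the system message and the raw user text in the user message."""
    system = (
        "You translate questions about NFL data into one Cypher query.\n"
        "Use ONLY the schema below. Reply with Cypher and nothing else.\n\n"
        + schema_to_text(schema)
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


messages = build_messages(SCHEMA, "Who won Super Bowl XXXI?")
print(messages[0]["content"])
```

Everything the model knows about the graph arrives through that one system message, so nothing in this step prevents it from wandering outside the schema.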

It was really cool, amazing even! I could ask it multi-hop queries in plain English, like

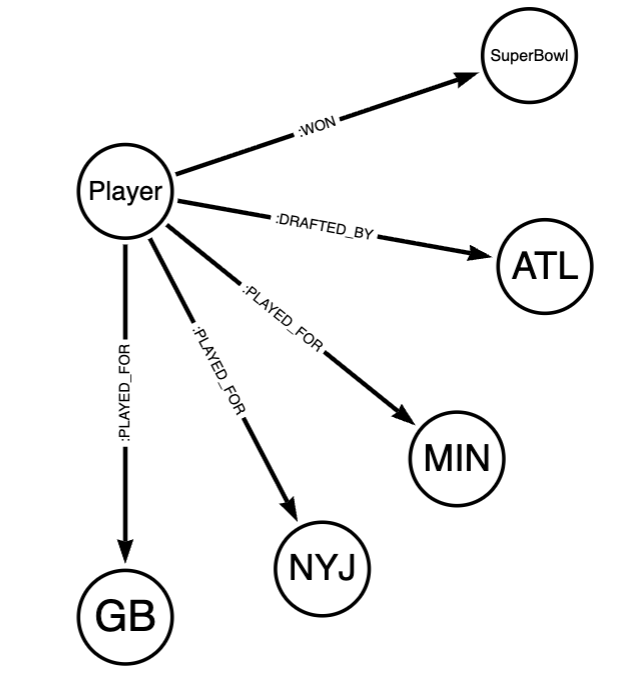

“Which Super Bowl was won by a QB who played for the Packers, Jets and Vikings but was drafted by Atlanta?”

and it would usually return several lines of valid Cypher, neatly traversing Player, Game, Team and Draft nodes to provide real answers backed by real data!

But, if I asked a question more than once, it would return a slightly different query… sometimes.

I was starting to question the scalability of this.

If I had thousands of users, how would I ensure they all had the same experience? Not to mention the token economics of using ChatGPT at scale like this…

At the end of the day, even though I had provided ChatGPT with the schema along with a note explicitly stating that this was THE ONLY SCHEMA, it still hallucinated labels, relationships and, worst of all, my intent.
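One cheap guard against hallucinated labels and relationships is to check the generated query against the known schema before running it. Here is a rough sketch using regular expressions. The patterns, `unknown_schema_terms` and the schema sets are hypothetical, and a real implementation would want a proper Cypher parser. A query can also pass this check and still misread the user’s intent, which no schema check will catch.

```python
import re

# Rough patterns for "(var:Label" and "[var:REL_TYPE". This is a sketch, not a
# Cypher parser: it only sees the first label/type in each pattern and can be
# fooled by string literals that happen to look like patterns.
NODE_LABEL = re.compile(r"\(\s*\w*\s*:\s*(\w+)")
REL_TYPE = re.compile(r"\[\s*\w*\s*:\s*(\w+)")


def unknown_schema_terms(cypher, labels, rel_types):
    """Return the labels and relationship types used in `cypher` that the schema lacks."""
    return {
        "labels": set(NODE_LABEL.findall(cypher)) - set(labels),
        "relationships": set(REL_TYPE.findall(cypher)) - set(rel_types),
    }


# Hypothetical schema and a generated query that invents a :Quarterback label
known_labels = {"Player", "Team", "Game"}
known_rels = {"PLAYED_FOR", "WON"}
generated = "MATCH (qb:Quarterback)-[:PLAYED_FOR]->(t:Team) RETURN qb.name"

print(unknown_schema_terms(generated, known_labels, known_rels))
# {'labels': {'Quarterback'}, 'relationships': set()}
```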

Serendipitous Example of Intent Hallucination:

Just now, I asked ChatGPT (well, Claude technically) to write Cypher for the prompt we just mentioned.

The response looked great at first, and it would run and return seemingly valid data.

However, it wouldn’t return the correct answer, Super Bowl XXXI, because it wouldn’t find the correct QB, Brett Favre.

Even those unfamiliar with Cypher syntax may be able to spot the flaw below.

Again, here’s the prompt:

“Which Super Bowl was won by a QB who played for the Packers, Jets and Vikings but was drafted by Atlanta?”

```cypher
// Find QBs drafted by Atlanta who played for specific teams and won a Super Bowl
MATCH (qb:Player)-[d:DRAFTED_BY]->(falcons:Team {name: 'ATL'})
WHERE qb.position = 'QB'
MATCH (qb)-[p:PLAYED_FOR]->(team:Team)
WHERE team.name IN ['GB', 'NYJ', 'MIN']
WITH qb, count(DISTINCT team.name) as teamsPlayed
WHERE teamsPlayed = 3
MATCH (qb)-[:WON]->(game:Game)
RETURN qb.name, game.name, game.date
```

Technically, Brett Favre also played for ATL. But that was implied, not explicitly stated.

ChatGPT, for whatever reason, decided to look for QBs who played for 3 teams and 3 teams only. GB, NYJ and MIN.
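Stripped of Cypher, the two readings of the question, “these three teams and no others” versus “at least these three teams”, differ by a single operator: set equality versus a subset test. Here is a minimal sketch using the team abbreviations from the query above. The function names are hypothetical, and this only illustrates the two interpretations. It is not a fix for the generated query.

```python
# The teams named in the question, as the abbreviations used in the query
REQUIRED = {"GB", "NYJ", "MIN"}


def played_exactly(teams):
    # "These three teams and three teams only"
    return set(teams) == REQUIRED


def played_at_least(teams):
    # "These three teams, possibly among others" (the intended meaning)
    return REQUIRED <= set(teams)


favre = {"ATL", "GB", "NYJ", "MIN"}  # Falcons, Packers, Jets, Vikings

print(played_exactly(favre))   # False
print(played_at_least(favre))  # True
```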

User Testing

Now that the prototype was up and running, I asked a friend of mine to try it out.

I’m glad I did, because the absurdity of the questions he’d ask confirmed my new apprehensions.

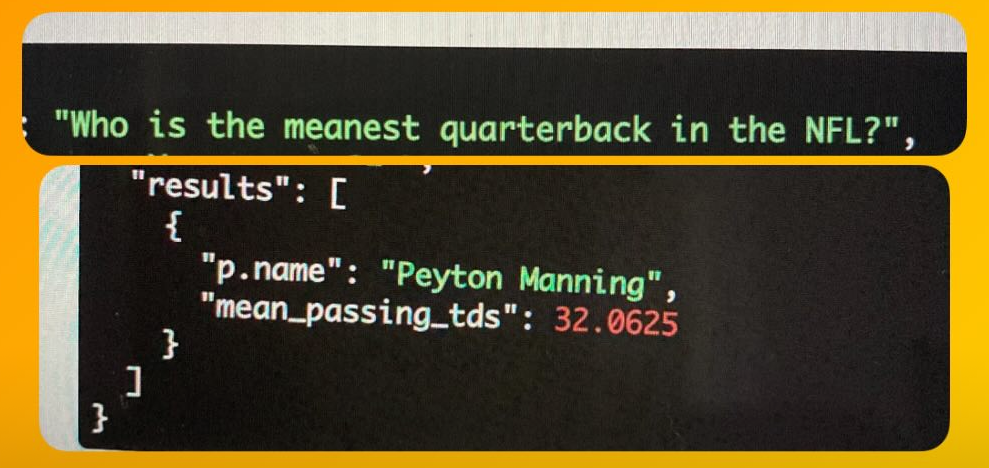

The question that tore the wool from my eyes:

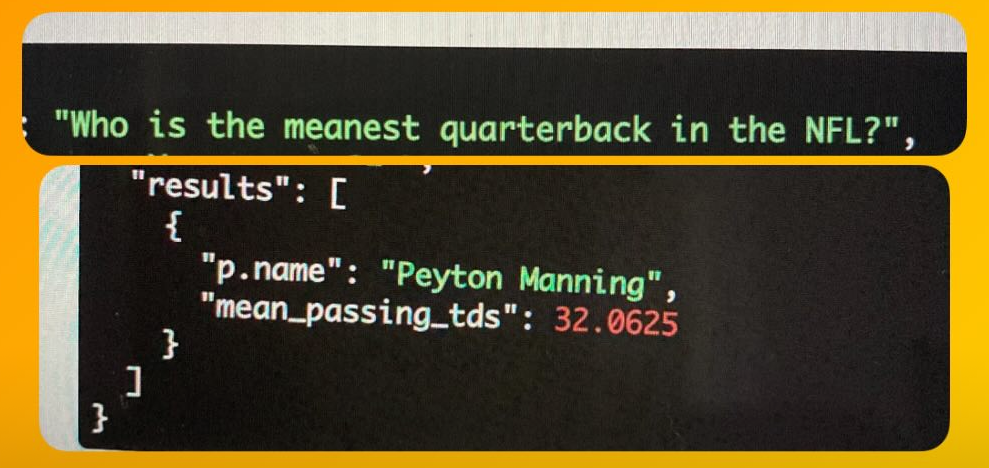

“Who is the meanest quarterback in the NFL?”

Peyton Manning - the MOAT

And sure enough, like the good little LLM it is, ChatGPT dutifully generated a Cypher query despite all common sense.

I wish I could find the Cypher query that was generated, but I can tell you it was at least 40 lines long and had several aggregations.

I think it interpreted “meanest” as “the most mean”, AKA average.

Naturally, it set out to find the most average quarterback in the NFL by determining which QB had the highest average (“meanest”) passing touchdowns, presumably per season, but we can’t be quite sure.

It also did not once check for unsportsmanlike conduct penalties or sideline tirades.

I know this because I hadn’t indexed that data (yet).

Takeaways

- Writing valid and schema-correct Cypher is only half the battle

- Extracting intent is much harder.

- Validating intent is even harder than that.

- If there are a million ways to ask one question, then there are also a million ways to misinterpret it.

Lingering Questions

- What if we implicitly meant “meanest, currently active QB”?

- How explicit do we need the users to be?

- How explicit do the users expect they need to be?

- Should we expect users to know how to optimize their prompts for us?

- No, probably

- How do we guide users toward “askable” questions?

- Can you sanitize input for…intent?

- At what point does the prompt engineering effort outweigh the benefits of using an LLM?

- How do we balance flexibility with accuracy?

- How do we catch less obvious hallucinations?

- How do we validate the LLM’s interpretation of the data?

- Since then, I have heard good things about BAML?

Answers: Back to the Foundry

When they zig, Medcalf Software Solutions zags.

No more AI. Just chunks. Of Queries. And chains. And chains of chunks of queries chained together.

Stick around for Part 3, where I pivot to good old deterministic systems and design quite possibly the first Dynamically Generated Schema Aware DSL For User Friendly Graph Native Query Composition.

Or DGSASSLFUFGNQC for short.

I never said I was good at branding.